Running serverless is fun, cost-effective, and scalable, especially while your application is still growing. At the same time, I have found testing Lambdas locally to be a pain, especially when you want to test your application's integration with SQS or SNS. In this article, I will share how I test my applications.

Problem Statement

I have created many microservices using NestJS as my core framework. Each of my microservices is designed to handle one business feature (e.g. each microservice exposes GET/POST/PATCH/PUT/DELETE per business entity).

Logical grouping keeps our microservices small while preventing service explosion, which lets us keep the number of microservices under control.

As part of this logical grouping, each of my microservices also handles SQS/SNS messages within the same Lambda.

Solution

Since my aim was to test these events against my local server, here is the plan:

Create a Lambda that forwards every event it receives to my locally running web application, then set up SQS with this Lambda as its target. Simple.

If you already have a NestJS Lambda and LocalStack set up, you can skip the initial setup and jump directly to SQS & Lambda Integration.

Setting up a sample NestJS app

I am using a Node.js application, but the idea can be applied to any other language. I will create a sample Lambda function using NestJS; for details, refer to the guide on setting up a NestJS application. Once the NestJS application is set up, we need to add a wrapper to convert it into a Lambda function. For this, we add the serverless dependencies (the handler below uses `@vendia/serverless-express` and the `aws-lambda` types) and then add the handler.

Now our Lambda is all set up to be deployed on AWS. I won't go into the details of deploying it to AWS in this article, but you can refer to this article to do it via the Serverless Framework.

SQS message handler

If the Lambda event contains `Records`, we process the received messages. For simplicity, I am just logging each message; in a real-world application, you would have a factory that handles each type of message.

The modified handler will look something like this.

```typescript
import { NestFactory } from "@nestjs/core";
import { AppModule } from "./app.module";
import serverlessExpress from "@vendia/serverless-express";
import { Callback, Context, Handler } from "aws-lambda";

// Cached across warm invocations so NestJS is bootstrapped only once per container
let server: Handler;

async function bootstrap(): Promise<Handler> {
  const app = await NestFactory.create(AppModule);
  await app.init();
  const expressApp = app.getHttpAdapter().getInstance();
  return serverlessExpress({ app: expressApp });
}

// For now, just log each record (SQS or SNS); stringify so the record isn't printed as [object Object]
const handleMessage = (messages: any[]) => {
  messages.forEach((message) => console.log(`Message received: ${JSON.stringify(message)}`));
};

export const handler: Handler = async (event: any, context: Context, callback: Callback) => {
  // SQS and SNS events carry a Records array; HTTP events do not
  if (event.Records) {
    handleMessage(event.Records);
    return;
  }

  // Everything else is treated as an HTTP event and passed to the NestJS app
  server = server ?? (await bootstrap());
  return server(event, context, callback);
};
```

Here, the `handleMessage` function simply logs every record.
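In a real-world application, `handleMessage` would hand each record to a factory that picks a handler per message type. A minimal sketch of such a dispatcher, assuming (hypothetically) that each SQS body is JSON shaped like `{ "type": "...", "payload": ... }`; the message types, `dispatchRecord` and the result labels are illustrative names, not part of the original code:

```typescript
// Hypothetical envelope in the SQS body: { "type": "...", "payload": ... }
type MessageHandler = (payload: unknown) => void;

interface SqsRecordLike {
  messageId: string;
  body: string;
}

// Handlers keyed by message type; a Map avoids accidental hits on Object.prototype keys
const messageHandlers = new Map<string, MessageHandler>([
  ["order.created", (payload) => console.log("Creating order", payload)],
  ["order.cancelled", (payload) => console.log("Cancelling order", payload)],
]);

type DispatchResult = "handled" | "unhandled" | "unparseable";

function dispatchRecord(record: SqsRecordLike): DispatchResult {
  let parsed: unknown;
  try {
    parsed = JSON.parse(record.body);
  } catch {
    return "unparseable"; // e.g. a plain-text body such as "hello"
  }
  if (typeof parsed !== "object" || parsed === null) return "unparseable";

  const { type, payload } = parsed as { type?: unknown; payload?: unknown };
  const handler = typeof type === "string" ? messageHandlers.get(type) : undefined;
  if (!handler) return "unhandled";

  handler(payload);
  return "handled";
}
```

`handleMessage` could then call `dispatchRecord` for each record and decide what to do with `unhandled` or `unparseable` messages (log them, or send them to a dead-letter queue).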

Now our application is ready to receive messages.

Set up LocalStack

First, we need to run LocalStack locally; I will be using its Docker image.

Create a `docker-compose.yml` to start LocalStack:

```yaml
version: "3.7"

services:
  localstack:
    container_name: "${LOCALSTACK_DOCKER_NAME-localstack_main}"
    image: localstack/localstack:1.2.0
    network_mode: bridge
    ports:
      - "127.0.0.1:53:53" # only required for Pro (DNS)
      - "127.0.0.1:53:53/udp" # only required for Pro (DNS)
      - "127.0.0.1:443:443" # only required for Pro (LocalStack HTTPS Edge Proxy)
      - "127.0.0.1:4510-4559:4510-4559" # external service port range
      - "127.0.0.1:4566:4566" # LocalStack Edge Proxy
    environment:
      - SERVICES=${SERVICES-}
      - DEBUG=${DEBUG-}
      - DATA_DIR=${DATA_DIR-}
      - LAMBDA_EXECUTOR=${LAMBDA_EXECUTOR-}
      - LOCALSTACK_API_KEY=${LOCALSTACK_API_KEY-} # only required for Pro
      - HOST_TMP_FOLDER=${TMPDIR:-/tmp/}localstack
      - DOCKER_HOST=unix:///var/run/docker.sock
    volumes:
      - "${TMPDIR:-/tmp}/localstack:/tmp/localstack"
      - "/var/run/docker.sock:/var/run/docker.sock"
      - ./localstack_bootstrap:/docker-entrypoint-initaws.d/
```

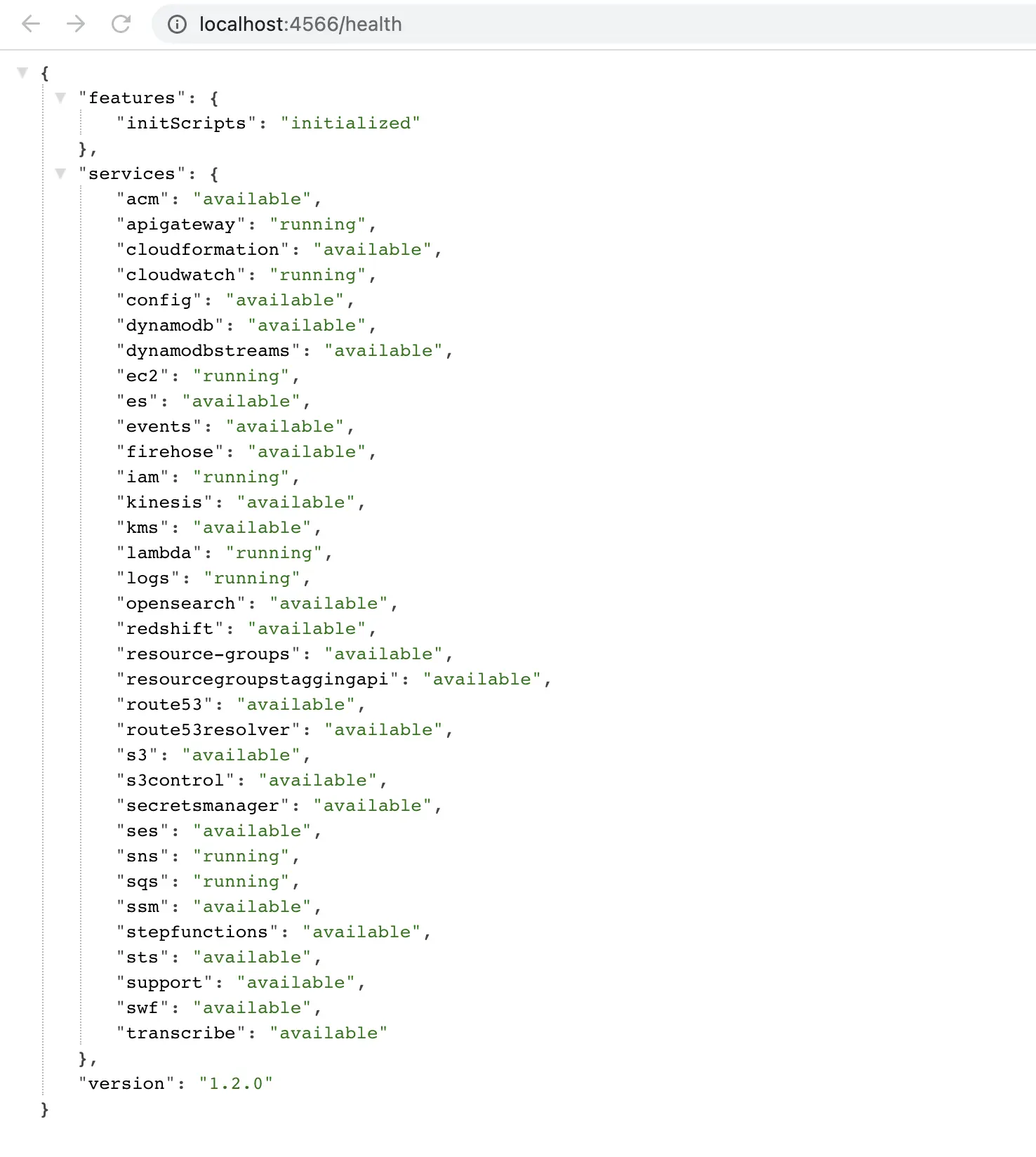

now when do docker-compose up, this will download the localstack (v1.2.0) and start it. To verify weather the service are up and running,

just to go http://localhost:4566/health in your browser, it would be something like this.
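If you would rather check this from a script (for example, before running Terraform), the same health endpoint can be polled. A minimal sketch, assuming Node.js 18+ for the global `fetch` and that the health response contains a `services` map from service name to a status such as `"available"` or `"running"`; `waitForLocalStack`, `servicesReady` and their defaults are hypothetical names:

```typescript
// Assumed shape of LocalStack's /health response (only the part used here)
interface LocalStackHealth {
  services?: Record<string, string>;
}

// A service counts as usable once LocalStack reports it as "available" or "running"
function servicesReady(health: LocalStackHealth, required: string[]): boolean {
  return required.every((name) => {
    const status = health.services?.[name];
    return status === "available" || status === "running";
  });
}

// Polls the health endpoint until all required services are usable, or gives up
async function waitForLocalStack(
  url = "http://localhost:4566/health",
  required: string[] = ["sqs", "lambda", "iam"],
  attempts = 30,
  delayMs = 2000,
): Promise<void> {
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      const res = await fetch(url);
      if (res.ok) {
        const health = (await res.json()) as LocalStackHealth;
        if (servicesReady(health, required)) return;
      }
    } catch {
      // LocalStack is not accepting connections yet; wait and retry
    }
    await new Promise((resolve) => setTimeout(resolve, delayMs));
  }
  throw new Error(`LocalStack services ${required.join(", ")} not ready after ${attempts} attempts`);
}
```

For example, a small setup script could `await waitForLocalStack()` before invoking `terraform apply`.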

Install Terraform

I will be using Terraform to set up my infrastructure. To install it, follow the instructions given here.

SQS & Lambda Integration

This is the main section, where all the magic happens.

Set up the Terraform provider

We need to set up LocalStack as our AWS provider. To do this, we override the service endpoints and provide dummy credentials.

```hcl
provider "aws" {
  # Dummy credentials: LocalStack accepts any values
  access_key = "test"
  secret_key = "test"
  region     = "us-east-1"

  # Deprecated in newer AWS provider versions (Terraform warns: use s3_use_path_style instead)
  s3_force_path_style         = false
  skip_credentials_validation = true
  skip_metadata_api_check     = true
  skip_requesting_account_id  = true

  # Point every service at the LocalStack edge port
  endpoints {
    apigateway     = "http://localhost:4566"
    apigatewayv2   = "http://localhost:4566"
    cloudformation = "http://localhost:4566"
    cloudwatch     = "http://localhost:4566"
    dynamodb       = "http://localhost:4566"
    ec2            = "http://localhost:4566"
    es             = "http://localhost:4566"
    elasticache    = "http://localhost:4566"
    firehose       = "http://localhost:4566"
    iam            = "http://localhost:4566"
    kinesis        = "http://localhost:4566"
    lambda         = "http://localhost:4566"
    rds            = "http://localhost:4566"
    redshift       = "http://localhost:4566"
    route53        = "http://localhost:4566"
    s3             = "http://s3.localhost.localstack.cloud:4566"
    secretsmanager = "http://localhost:4566"
    ses            = "http://localhost:4566"
    sns            = "http://localhost:4566"
    sqs            = "http://localhost:4566"
    ssm            = "http://localhost:4566"
    stepfunctions  = "http://localhost:4566"
    sts            = "http://localhost:4566"
  }
}
```

Helper Lambda

We will create a Lambda that forwards each event to my application.

This is a very simple Lambda; create it in the file `lambda/src/main.ts`:

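```typescript
import axios from "axios";

// Forwards every invocation (event + context) to the locally running app at process.env.URL
export const handler = async (event: any, context: any) => {
  const body = { event, context };

  try {
    const axiosResponse = await axios.post(process.env.URL as string, body);
    return {
      statusCode: 200,
      headers: { "Content-Type": "application/json" },
      body: axiosResponse.data?.body || axiosResponse.data,
    };
  } catch (e: any) {
    // Returning (rather than throwing) means an SQS-triggered batch is treated as processed,
    // so a failed forward is not retried
    return {
      statusCode: 500,
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(e.message),
    };
  }
};
```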

We use axios to POST the event and context to the URL given in the `URL` environment variable.
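One consequence of forwarding over HTTP is worth knowing: axios sends a plain-object body as JSON, and `JSON.stringify` drops function-valued properties. The Lambda `context` therefore reaches the main app with its data fields (such as `functionName`) but without methods like `getRemainingTimeInMillis()`. This sketch reproduces that round trip with made-up sample values; it does not call axios or Lambda:

```typescript
// Made-up sample values standing in for a real Lambda context and SQS event
const sampleContext = {
  functionName: "helper-lambda",
  awsRequestId: "example-request-id",
  getRemainingTimeInMillis: () => 3000,
};
const sampleEvent = { Records: [{ messageId: "example-id", body: "hello" }] };

// Same transformation the body undergoes on its way to the main app: serialize, then parse
const received = JSON.parse(JSON.stringify({ event: sampleEvent, context: sampleContext }));

console.log(received.context.functionName); // helper-lambda
console.log(typeof received.context.getRemainingTimeInMillis); // undefined
console.log(received.event.Records[0].body); // hello
```

So any code in the main app that relies on context methods needs its own fallback when invoked through the helper Lambda.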

I created a script, `scripts/build.sh`, to compile the Lambda:

```bash
#!/bin/bash
rm -rf lambda_function.zip
pushd lambda || exit 1
npm install
npm run build
popd || exit 1
```

Creating lambda in localstack

I am creating `lambda.tf`. In this file:

- We create an `aws_iam_role` for this Lambda.
- We use a `null_resource` to execute the build script above; it is re-triggered whenever `main.ts` is modified.
- We then create a zip file for our Lambda using the `archive_file` data source.
- Finally, we create the Lambda, named `helper-lambda`, using `aws_lambda_function`. We also pass the `URL` environment variable here.

```hcl
# Execution role for the helper Lambda (role is a required argument of aws_lambda_function)
resource "aws_iam_role" "iam_for_lambda" {
  name = "iam_for_lambda"

  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "sts:AssumeRole",
      "Principal": {
        "Service": "lambda.amazonaws.com"
      },
      "Effect": "Allow",
      "Sid": ""
    }
  ]
}
EOF
}

# Re-runs the build script whenever the Lambda source changes
resource "null_resource" "build" {
  triggers = {
    versions = filesha256("./lambda/src/main.ts")
  }

  provisioner "local-exec" {
    command = "/bin/bash ./scripts/build.sh"
  }
}

# Zips the built Lambda (dist + node_modules); excludes are relative to source_dir
data "archive_file" "lambda_zip" {
  type        = "zip"
  source_dir  = "./lambda"
  output_path = "lambda_function.zip"
  excludes    = ["src"]

  depends_on = [null_resource.build]
}

resource "aws_lambda_function" "lambda" {
  filename      = data.archive_file.lambda_zip.output_path
  function_name = "helper-lambda"
  role          = aws_iam_role.iam_for_lambda.arn
  handler       = "dist/main.handler"
  # Taken from the data source, so planning does not require the zip to exist already
  source_code_hash = data.archive_file.lambda_zip.output_base64sha256
  runtime          = "nodejs16.x"

  environment {
    variables = {
      URL = var.url
    }
  }
}
```

SQS

Next, we create the queue and register `helper-lambda` as its target, so that whenever SQS receives a message, it triggers the Lambda with that message.

- `aws_sqs_queue` creates a queue named `test-queue`. We keep `visibility_timeout_seconds` high so that a message still being processed does not become visible again and get replayed.
- `aws_lambda_event_source_mapping` sets up the Lambda function as the target of our SQS queue.

```hcl
resource "aws_sqs_queue" "test-queue" {
  name                       = "test-queue"
  visibility_timeout_seconds = 600
}

resource "aws_lambda_event_source_mapping" "test_queue_lambda_mapping" {
  batch_size       = 10
  event_source_arn = aws_sqs_queue.test-queue.arn
  enabled          = true
  function_name    = aws_lambda_function.lambda.function_name
}
```
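How high is high enough? AWS's Lambda documentation recommends setting an SQS source queue's visibility timeout to at least six times the function timeout, and Lambda rejects an event source mapping whose queue visibility timeout is shorter than the function timeout. The sketch below encodes those two rules; the function names and result labels are hypothetical, and the 3-second function timeout is the default visible in the `terraform plan` output:

```typescript
// Two rules for SQS-triggered Lambdas, per AWS guidance; names are illustrative
const RECOMMENDED_MULTIPLIER = 6;

// Recommended minimum visibility timeout (seconds) for a given Lambda timeout
function recommendedVisibilityTimeout(functionTimeoutSeconds: number): number {
  return functionTimeoutSeconds * RECOMMENDED_MULTIPLIER;
}

type VisibilityCheck = "ok" | "below-recommended" | "shorter-than-function-timeout";

function checkVisibilityTimeout(visibilityTimeoutSeconds: number, functionTimeoutSeconds: number): VisibilityCheck {
  if (visibilityTimeoutSeconds < functionTimeoutSeconds) {
    // Creating the event source mapping fails in this case
    return "shorter-than-function-timeout";
  }
  if (visibilityTimeoutSeconds < recommendedVisibilityTimeout(functionTimeoutSeconds)) {
    // Works, but a slow or retried batch may become visible again and be redelivered
    return "below-recommended";
  }
  return "ok";
}

// This setup: visibility_timeout_seconds = 600 and a 3-second Lambda timeout
console.log(checkVisibilityTimeout(600, 3)); // ok
```

With the default 3-second timeout, 600 seconds is far above the recommended 18, which is comfortable for a local setup where you may be pausing on breakpoints in the main app.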

variable.tf

Finally, we create `variable.tf` to store variables. Here I define a variable named `url`, which is passed to the Lambda as its `URL` environment variable.

```hcl
variable "url" {
  type        = string
  description = "Url for forwarding the event"
  default     = "http://host.docker.internal:3001/lambda-handler"
}
```

Notice that I am using `host.docker.internal` instead of `localhost`. This is because the Lambda runs inside a Docker container, where `localhost` refers to the container itself; `host.docker.internal` resolves to the host machine from within the container.

So, our Terraform scripts are ready.

Now we run:

- `terraform init` downloads the provider plugins the configuration uses (AWS, plus the archive and null providers).

- `terraform plan` shows the resources to be created. In this case, the output looks like this:

```
$ terraform plan

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create
 <= read (data resources)

Terraform will perform the following actions:

  # data.archive_file.lambda_zip will be read during apply
  # (depends on a resource or a module with changes pending)
 <= data "archive_file" "lambda_zip" {
      + excludes = [
          + "./lambda/src",
        ]
      + id = (known after apply)
      + output_base64sha256 = (known after apply)
      + output_md5 = (known after apply)
      + output_path = "lambda_function.zip"
      + output_sha = (known after apply)
      + output_size = (known after apply)
      + source_dir = "./lambda"
      + type = "zip"
    }

  # aws_iam_role.iam_for_lambda_tf will be created
  + resource "aws_iam_role" "iam_for_lambda" {
      + arn = (known after apply)
      + assume_role_policy = jsonencode(
            {
              + Statement = [
                  + {
                      + Action = "sts:AssumeRole"
                      + Effect = "Allow"
                      + Principal = {
                          + Service = "lambda.amazonaws.com"
                        }
                      + Sid = ""
                    },
                ]
              + Version = "2012-10-17"
            }
        )
      + create_date = (known after apply)
      + force_detach_policies = false
      + id = (known after apply)
      + managed_policy_arns = (known after apply)
      + max_session_duration = 3600
      + name = "iam_for_lambda"
      + name_prefix = (known after apply)
      + path = "/"
      + tags_all = (known after apply)
      + unique_id = (known after apply)

      + inline_policy {
          + name = (known after apply)
          + policy = (known after apply)
        }
    }

  # aws_lambda_event_source_mapping.test_queue_lambda_mapping will be created
  + resource "aws_lambda_event_source_mapping" "test_queue_lambda_mapping" {
      + batch_size = 10
      + enabled = true
      + event_source_arn = (known after apply)
      + function_arn = (known after apply)
      + function_name = "helper-lambda"
      + id = (known after apply)
      + last_modified = (known after apply)
      + last_processing_result = (known after apply)
      + maximum_record_age_in_seconds = (known after apply)
      + maximum_retry_attempts = (known after apply)
      + parallelization_factor = (known after apply)
      + state = (known after apply)
      + state_transition_reason = (known after apply)
      + uuid = (known after apply)

      + amazon_managed_kafka_event_source_config {
          + consumer_group_id = (known after apply)
        }

      + self_managed_kafka_event_source_config {
          + consumer_group_id = (known after apply)
        }
    }

  # aws_lambda_function.lambda will be created
  + resource "aws_lambda_function" "lambda" {
      + architectures = (known after apply)
      + arn = (known after apply)
      + filename = "lambda_function.zip"
      + function_name = "helper-lambda"
      + handler = "dist/main.handler"
      + id = (known after apply)
      + invoke_arn = (known after apply)
      + last_modified = (known after apply)
      + memory_size = 128
      + package_type = "Zip"
      + publish = false
      + qualified_arn = (known after apply)
      + qualified_invoke_arn = (known after apply)
      + reserved_concurrent_executions = -1
      + role = (known after apply)
      + runtime = "nodejs16.x"
      + signing_job_arn = (known after apply)
      + signing_profile_version_arn = (known after apply)
      + source_code_hash = "1"
      + source_code_size = (known after apply)
      + tags_all = (known after apply)
      + timeout = 3
      + version = (known after apply)

      + environment {
          + variables = {
              + "URL" = "http://localhost:3001/lambda-handler"
            }
        }

      + ephemeral_storage {
          + size = (known after apply)
        }

      + tracing_config {
          + mode = (known after apply)
        }
    }

  # aws_sqs_queue.test-queue will be created
  + resource "aws_sqs_queue" "test-queue" {
      + arn = (known after apply)
      + content_based_deduplication = false
      + deduplication_scope = (known after apply)
      + delay_seconds = 0
      + fifo_queue = false
      + fifo_throughput_limit = (known after apply)
      + id = (known after apply)
      + kms_data_key_reuse_period_seconds = (known after apply)
      + max_message_size = 262144
      + message_retention_seconds = 345600
      + name = "test-queue"
      + name_prefix = (known after apply)
      + policy = (known after apply)
      + receive_wait_time_seconds = 0
      + redrive_allow_policy = (known after apply)
      + redrive_policy = (known after apply)
      + sqs_managed_sse_enabled = (known after apply)
      + tags_all = (known after apply)
      + url = (known after apply)
      + visibility_timeout_seconds = 300
    }

  # null_resource.build will be created
  + resource "null_resource" "build" {
      + id = (known after apply)
      + triggers = {
          + "versions" = "ffef874951cd9c637c7d1699b1604f6b6c5f957a14ca3bc9686bb44731fff0e9"
        }
    }

Plan: 5 to add, 0 to change, 0 to destroy.

Changes to Outputs:
  + lambda = (known after apply)
  + test-queue = (known after apply)
╷
│ Warning: Argument is deprecated
│
│   with provider["registry.terraform.io/hashicorp/aws"],
│   on provider.tf line 5, in provider "aws":
│    5:   s3_force_path_style = false
│
│ Use s3_use_path_style instead.
│
│ (and one more similar warning elsewhere)
╵
╷
│ Warning: Attribute Deprecated
│
│   with provider["registry.terraform.io/hashicorp/aws"],
│   on provider.tf line 5, in provider "aws":
│    5:   s3_force_path_style = false
│
│ Use s3_use_path_style instead.
│
│ (and one more similar warning elsewhere)
╵

───────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly these actions if you run "terraform apply" now.
```

- `terraform apply --auto-approve` finally creates the resources:

```
$ terraform apply --auto-approve
null_resource.build: Creating...
null_resource.build: Provisioning with 'local-exec'...
null_resource.build (local-exec): Executing: ["/bin/sh" "-c" "/bin/bash ./scripts/build.sh"]
null_resource.build (local-exec): ~/Documents/github/blog-code/terraform/sqs-lambda-integration-localstack/lambda ~/Documents/github/blog-code/terraform/sqs-lambda-integration-localstack
aws_iam_role.iam_for_lambda: Creating...
aws_sqs_queue.test-queue: Creating...
aws_iam_role.iam_for_lambda: Creation complete after 0s [id=iam_for_lambda]
null_resource.build (local-exec): up to date, audited 322 packages in 2s
null_resource.build (local-exec): 41 packages are looking for funding
null_resource.build (local-exec):   run `npm fund` for details
null_resource.build (local-exec): found 0 vulnerabilities
null_resource.build (local-exec): > [email protected] prebuild
null_resource.build (local-exec): > rimraf dist
null_resource.build (local-exec): > [email protected] build
null_resource.build (local-exec): > npm run tsc
null_resource.build (local-exec): > [email protected] tsc
null_resource.build (local-exec): > tsc -p tsconfig.json && tsc -p tsconfig.build.json
null_resource.build (local-exec): ~/Documents/github/blog-code/terraform/sqs-lambda-integration-localstack
null_resource.build: Creation complete after 8s [id=5577006791947779410]
data.archive_file.lambda_zip: Reading...
aws_sqs_queue.test-queue: Still creating... [10s elapsed]
data.archive_file.lambda_zip: Read complete after 8s [id=245dcc34cbcf937d81b543bffd855dcda246f144]
aws_lambda_function.lambda: Creating...
aws_sqs_queue.test-queue: Still creating... [20s elapsed]
aws_lambda_function.lambda: Creation complete after 8s [id=helper-lambda]
aws_sqs_queue.test-queue: Creation complete after 25s [id=http://localhost:4566/000000000000/test-queue]
aws_lambda_event_source_mapping.test_queue_lambda_mapping: Creating...
aws_lambda_event_source_mapping.test_queue_lambda_mapping: Creation complete after 0s [id=d6634787-8cc0-4366-aedc-cfd18ab75c9c]
╷
│ Warning: Argument is deprecated
│
│   with provider["registry.terraform.io/hashicorp/aws"],
│   on provider.tf line 5, in provider "aws":
│    5:   s3_force_path_style = false
│
│ Use s3_use_path_style instead.
│
│ (and 2 more similar warnings elsewhere)
╵
╷
│ Warning: Attribute Deprecated
│
│   with provider["registry.terraform.io/hashicorp/aws"],
│   on provider.tf line 5, in provider "aws":
│    5:   s3_force_path_style = false
│
│ Use s3_use_path_style instead.
│
│ (and 2 more similar warnings elsewhere)
╵

Apply complete! Resources: 5 added, 0 changed, 0 destroyed.

Outputs:

lambda = "arn:aws:apigateway:us-east-1:lambda:path/2015-03-31/functions/arn:aws:lambda:us-east-1:000000000000:function:helper-lambda/invocations"
test-queue = "http://localhost:4566/000000000000/test-queue"
```

And with that, the Lambda & SQS integration is created.

Lambda handler in main app

Before we start testing, we need to create a POST controller in our main application, which will be called by the deployed helper Lambda.

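```typescript
import { Body, Controller, Post } from "@nestjs/common";
import { handler } from "./lambda";

@Controller()
export class AppController {
  // Receives { event, context } from helper-lambda and replays it through our Lambda handler
  @Post("/lambda-handler")
  async lambdaHandler(@Body() request: any) {
    // No-op callback: the handler is async, so its result comes back through the promise
    return await handler(request.event, request.context, () => undefined);
  }
}
```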
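While iterating on `handleMessage`, you may also want to hit this controller without LocalStack in the loop. A minimal sketch, not part of the original setup: `fakeSqsEvent` and `postToLocalApp` are hypothetical helpers that build an SQS-shaped event (mirroring the fields of an SQS record delivered to Lambda) and POST it in the same `{ event, context }` envelope the helper Lambda sends. It assumes Node.js 18+ for the global `fetch` and the app listening on port 3001, as in `variable.tf`:

```typescript
import { createHash, randomUUID } from "node:crypto";

// Builds an event with one SQS-style record; receiptHandle is a placeholder value
function fakeSqsEvent(body: string, queueArn = "arn:aws:sqs:us-east-1:000000000000:test-queue") {
  const now = Date.now().toString();
  return {
    Records: [
      {
        messageId: randomUUID(),
        receiptHandle: "local-test-receipt-handle",
        body,
        attributes: {
          ApproximateReceiveCount: "1",
          SentTimestamp: now,
          SenderId: "000000000000",
          ApproximateFirstReceiveTimestamp: now,
        },
        messageAttributes: {},
        md5OfBody: createHash("md5").update(body).digest("hex"),
        eventSource: "aws:sqs",
        eventSourceARN: queueArn,
        awsRegion: "us-east-1",
      },
    ],
  };
}

// POSTs the same { event, context } envelope as helper-lambda and returns the HTTP status
async function postToLocalApp(body: string, url = "http://localhost:3001/lambda-handler"): Promise<number> {
  const res = await fetch(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ event: fakeSqsEvent(body), context: {} }),
  });
  return res.status;
}
```

With the app running, `await postToLocalApp("hello")` should make `handleMessage` log a `Message received: ...` line, the same path the helper Lambda takes.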

Conclusion

Time to test our integration. I am using the AWS CLI to send a message to SQS:

```
$ aws sqs send-message --endpoint-url http://localhost:4566 --queue-url http://localhost:4566/000000000000/test-queue --message-body "hello"

# generated output
{
    "MD5OfMessageBody": "5d41402abc4b2a76b9719d911017c592",
    "MessageId": "2d0fc032-64ca-49a6-9f98-868854013942"
}
```

A moment later, we can see the output in our main application's log:

```
Message received: {"body":"hello","receiptHandle":"YjhjNDBlZGMtYjAxNi00YTNhLWFmZjEtZWU4MjI5YmE4NDZiIGFybjphd3M6c3FzOnVzLWVhc3QtMTowMDAwMDAwMDAwMDA6dGVzdC1xdWV1ZSAyZDBmYzAzMi02NGNhLTQ5YTYtOWY5OC04Njg4NTQwMTM5NDIgMTY2NzExOTA4My43NjI1NA==","md5OfBody":"5d41402abc4b2a76b9719d911017c592","eventSourceARN":"arn:aws:sqs:us-east-1:000000000000:test-queue","eventSource":"aws:sqs","awsRegion":"us-east-1","messageId":"2d0fc032-64ca-49a6-9f98-868854013942","attributes":{"SenderId":"000000000000","SentTimestamp":"1667119082819","ApproximateReceiveCount":"1","ApproximateFirstReceiveTimestamp":"1667119083762"},"messageAttributes":{}}
```

For me, this architecture has reduced the effort of testing SQS event integration with Lambda (I am running more than 20 copies of this helper Lambda across all my microservice endpoints).

I would love to hear from you as well: how are you testing SQS & Lambda in your local environment? You can find the Terraform & Lambda code on GitHub.

If you liked this article, you can buy me a coffee
